The Unstructured Data Problem

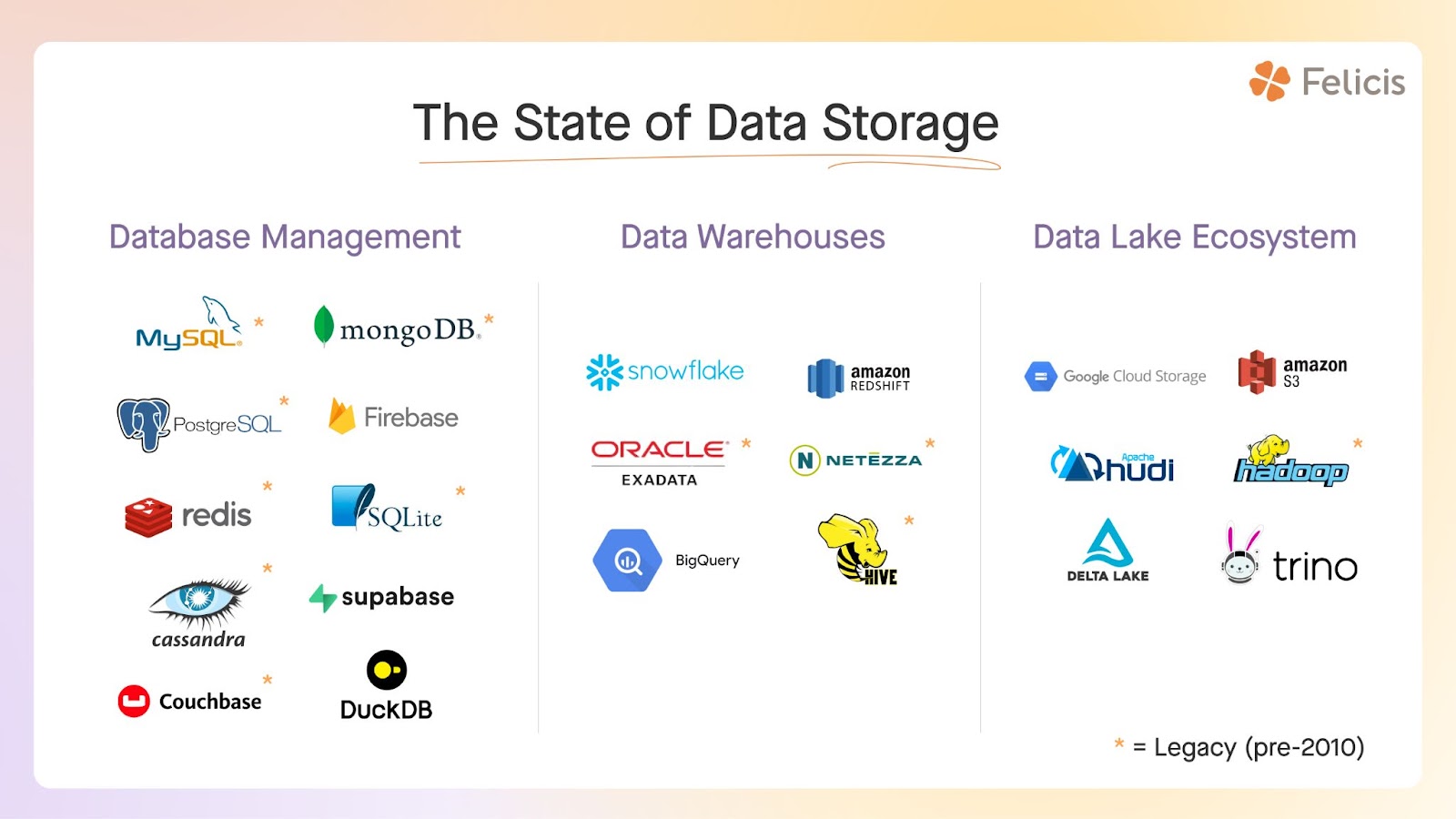

The current data management architecture just isn’t getting it done. As data volumes continue to grow, it’s well documented that companies are struggling to utilize this data at scale. Traditional data warehouses, built and optimized for large amounts of structured data, are ill-equipped for the new normal, in which data is generated by many different devices, at the edge, and is often unstructured.

Before detailing how data warehouses have come to dominate the storage and management of data, we must consider how data lakes address the limitations of these warehouses.

The truth is, the status quo of data management is in the midst of a long pivot and the next generation of data lakes represent a foundational shift in the future of data storage.

The movement towards unstructured data is a secular trend. This necessitates a new storage architecture – one that is modular – enabling end users to integrate storage, compute, and querying capabilities. Instead of all of your data in one place, you’ll have just the data you need wherever you need it.

But first, a little history

Data management represents the acquisition, storage, governance, and maintenance of data throughout its lifecycle within an organization. For scope, we’ll focus on data acquisition and storage. Traditionally, data has been stored in a data warehouse, a centralized repository built to store large volumes of structured data. This typically involves extracting data from the source system, transforming it into a suitable format, and loading it into the warehouse (ETL).

The primary purpose of a data warehouse is to enable efficient querying and analysis of historical and aggregated data, which helps companies make informed decisions based on insights gleaned from the data.

Intriguingly, the term, “data warehouse” was popularized in the early 90s to address the limitations of querying operational databases for analytical purposes. Warehouses introduced the concepts of ETL to go from data source to warehouse. The 2010s brought another shift as the advent of cloud computing revolutionized data warehousing. Cloud-based data warehouses could now offer scalable, cost-effective solutions for storing and analyzing data.

We believe there is another fundamental shift happening now with hybrid and distributed architectures, real-time analytics, and edge data generation. These innovations exacerbate the need

for more flexible, scalable solutions that can also adeptly handle unstructured data.

Enter the data lake

Data lakes emerged in the early 2010s with the prominence of big data. The original idea was to create a central repository capable of storing raw data in its native format. Hadoop, more specifically, Hadoop Distributed File System (‘HDFS’) emerged as one of the first options. Apache Spark followed, as did Databricks (who commercialized Spark), Cloudera, and Hortonworks. Recent entrants include Apache Hudi and Apache Iceberg as well. But what actually is a data lake?

A data lake consists of:

- Storage. Current options are: s3, Azure, GCP, Hadoop HDFS

- File format. Current options are: parquet, json, CSV, Avro

- Table format. Current options are: Iceberg, Hudi, Delta Lake

- A compute engine. Current options are: Starburst, Trino, Snowflake, Dremio, Clickhouse

Here’s how the lake architecture improves on the current status:

- Modularity. Currently, with data lakes, customers pick and choose what compute engine, file format, and storage back end. In a multi engine world, there is now portable compute, specific to your use case.

- Cost-savings. Warehouse costs scale linearly with warehouses. Data compaction offered by new entrants have vastly reduced the cost of storing large amounts of data.

- Convenience. Using SQL to query directly into databases wasn’t previously possible. Onehouse and Tabular have enabled this capability.

And to crystallize, the trends we believe that are propelling this are:

Unstructured data. With the growth of unstructured data expected to grow 10x by 2030, organizations need to think about how their data management strategy is set up for success. While data lakes accommodate a broader range of data types and organizations collect data from sources like social media, IoT devices, and text documents, data lakes must provide the flexibility to store and analyze this diverse data without requiring upfront schema design.

Scalability. Data lakes are designed to scale horizontally and are tailored to accommodate massive data volumes by distributing data across clusters of commodity hardware or cloud storage. This in turn allows businesses to handle big data without exorbitant costs.

Analytics, AI + ML use cases. The topic du jour. Raw or unstructured data enables data scientists and ML practitioners to more readily experiment and test the data for use in model training and inference. Organizations that are serious about using unstructured data to train foundation models. Then there is the issue that synthetic data creates, but that’s a subject for another post.

Who sails the data lakes?

Overall, businesses that handle diverse data sources, that have a need to analyze massive volumes of data, and seek to leverage advanced analytics and machine learning, can benefit significantly from adopting a data lake architecture.

Choose your fighter: Since Hadoop, a variety of companies have attempted to build commercial offerings of “DLaaS” (“Data Lake as a Service”) this including Hortonworks and Cloudera. Today, there are three primary options for data lakes – Delta Lake (Databricks), Apache Hudi (Onehouse), and Apache Iceberg (Tabular).

The three offerings can be contextualized like the big 3 in tennis – Federer, Nadal, and Djokovic. Allow a brief comparison (or tangent). All of these are open source projects aimed at improving the management and performance of data lakes. We can consider Tabular as Federer – the overall popularity, acceptance of the project is akin to the love and reverence of Federer (and that graceful one-handed backhand). Onehouse is making a big swing on real time streaming use cases – one can equate this to Nadal’s dominance on clay (and that gnarly forehand). Delta Lake is akin to Djokovic – a conglomeration of expertise across the board with the dominance and backing of Databricks (similar to how Djoker dominated pre-Alcaraz).

All tennis comparisons aside, there are some fundamental questions with respect to the future of data storage that present some of the largest opportunities:

- Can these managed service offerings, Onehouse (Hudi) and Tabular (Iceberg), extract value from their customers while maintaining a flexible, open approach? What is the killer use case that will resonate with customers?

- How quickly, and how much volume, is shifted away from traditional data warehouses?

- Can Hudi and Iceberg scale quickly enough to supersede an eventual new entrant technology or provider in the market?

Regardless of your table format preference, we believe data lakes will comprise a larger portion of storage in the future. We anticipate that data lake houses will follow a trajectory reminiscent of major relational databases which, as Onehouse pointed out, adheres to the Lindy effect, which states that the longer a technology has existed, the greater its likelihood of enduring into the future.

We’re still exploring these questions and many more. If you’re building in this space, we’d love to hear from you! Email us at data@felicis.com.