Context Engineering and Agent Infra

What Multi-Agent Systems Need To Function

The biggest question hanging over every developer’s head is: what will our AI stack look like in the future?

Operating software used to require a database and a GUI driven by human input, but that is changing as work becomes autonomous.

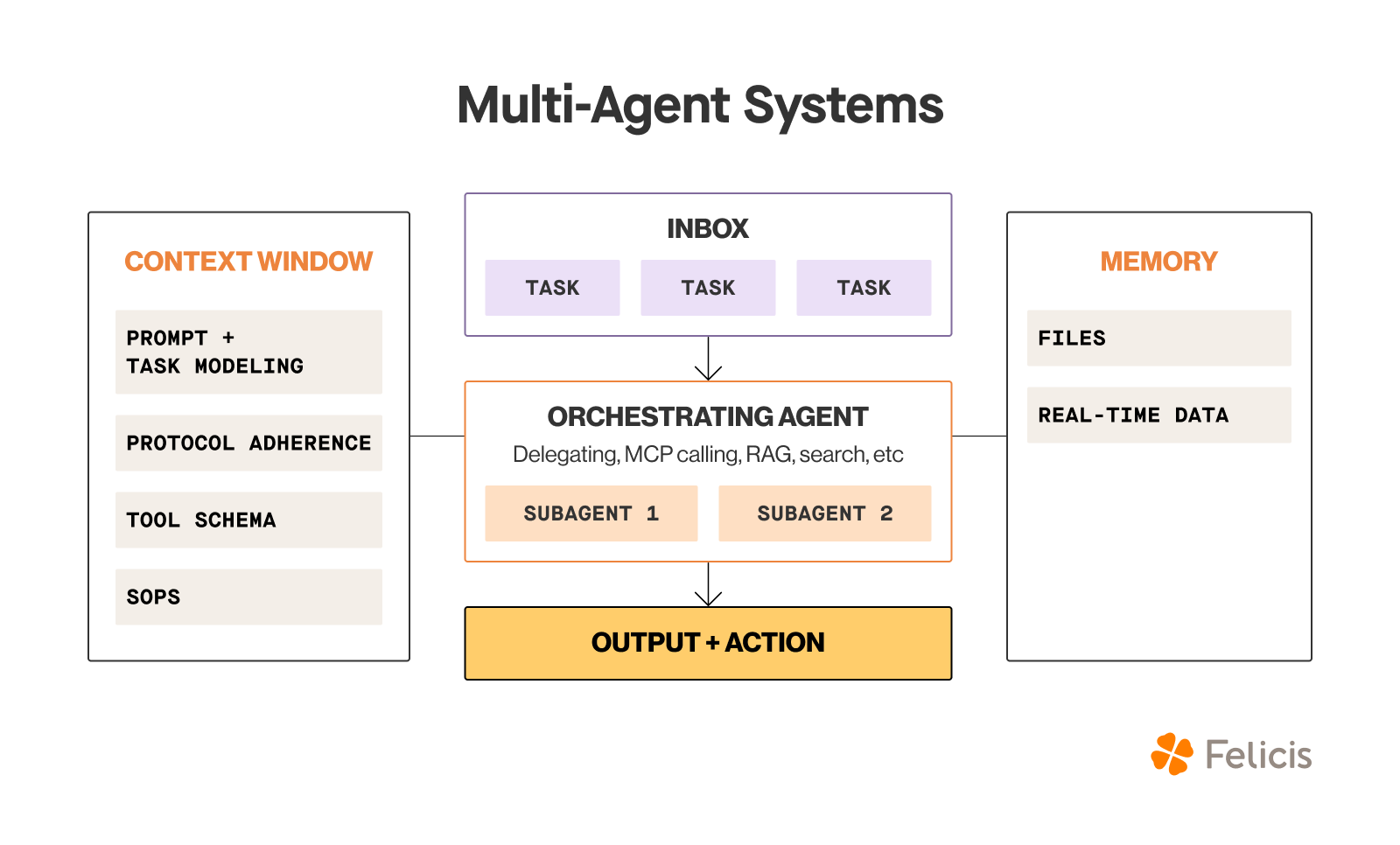

We’re entering a new era of agent infrastructure, one where the foundational primitives aren’t vector databases or orchestrators, but something more human: inboxes and SOPs – standard operating procedures.

Picture this: every autonomous agent has an inbox, just like we do. Tasks stream in from multiple sources – bug reports, Slack, APIs, human requests. The agent triages, prioritizes, and responds. It doesn’t need to be told what to do every time. Why? Because it has SOPs encoded as reusable behaviors. These SOPs are like macros for intelligent action. They evolve, get versioned, and learn.
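To make the inbox-and-SOP idea concrete, here is a minimal Python sketch under clearly stated assumptions: the `Task`, `Inbox`, `triage`, and `SOPS` names are hypothetical, the triage rule is invented for illustration, and each SOP is modeled as a versioned function keyed by task kind. The inbox is a priority queue; the agent pops the most urgent task and runs the latest version of the matching SOP.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Callable


@dataclass(order=True)
class Task:
    priority: int                      # lower number = handled first
    seq: int                           # tie-breaker that preserves arrival order
    source: str = field(compare=False)
    kind: str = field(compare=False)
    body: str = field(compare=False)


# Hypothetical SOP store: task kind -> {version: handler}.
SOPS: dict[str, dict[int, Callable[[Task], str]]] = {
    "bug_report": {
        1: lambda t: f"Reproduce, then file ticket: {t.body}",
        2: lambda t: f"Reproduce, search known issues, then file ticket: {t.body}",
    },
    "question": {1: lambda t: f"Draft answer for: {t.body}"},
}


def triage(source: str, kind: str) -> int:
    # Assumed rule for illustration: bug reports outrank questions,
    # and requests from humans outrank automated sources.
    score = 0 if kind == "bug_report" else 1
    return score if source == "human" else score + 2


class Inbox:
    def __init__(self) -> None:
        self._heap: list[Task] = []
        self._counter = itertools.count()

    def receive(self, source: str, kind: str, body: str) -> None:
        task = Task(triage(source, kind), next(self._counter), source, kind, body)
        heapq.heappush(self._heap, task)

    def process_next(self) -> str:
        task = heapq.heappop(self._heap)
        versions = SOPS[task.kind]
        sop = versions[max(versions)]  # run the latest SOP version
        return sop(task)


inbox = Inbox()
inbox.receive("slack", "question", "How do I rotate API keys?")
inbox.receive("human", "bug_report", "Login page returns 500")
print(inbox.process_next())
```

Because SOPs are stored by version, rolling back a behavior is a matter of pinning an older key, which is one plausible reading of SOPs that “get versioned.”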

But here’s the key enabler: context engineering.

Context engineering encompasses more than just prompting. It means dynamically supplying a model at runtime with the right information, such as data and tools, in the right structure, so that it is fully equipped to carry out a specific task. We’re not just stuffing prompts with static facts anymore. Context is dynamic, structured, and personalized. It’s not just what the agent knows, but how and when it knows it. Context engineering also means the agent’s tasks are constantly re-ranked as new information arrives. Think of it as DevOps for cognition. Tools and platforms will emerge that help teams design, store, recall, test, and deploy “context modules” the same way they ship code.
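One way to read “context modules” is as small, named, versioned units that render a piece of context from live state and can be unit-tested like any other code. The sketch below assumes that interpretation; `ContextModule`, `register`, `assemble`, and the module names are hypothetical, not an existing platform’s API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ContextModule:
    """Hypothetical unit of context: named, versioned, renderable from live state."""
    name: str
    version: str
    render: Callable[[dict], str]  # takes runtime state, returns a context snippet


# In-memory store keyed by (name, version); a real platform might use a database or git.
REGISTRY: dict[tuple[str, str], ContextModule] = {}


def register(module: ContextModule) -> None:
    REGISTRY[(module.name, module.version)] = module


def assemble(refs: list[tuple[str, str]], state: dict) -> str:
    # Recall pinned module versions and render each one against the current state.
    return "\n\n".join(REGISTRY[ref].render(state) for ref in refs)


register(ContextModule("user_profile", "1.0",
                       lambda s: f"User: {s['user']} (tier: {s['tier']})"))
register(ContextModule("open_tickets", "1.2",
                       lambda s: "Open tickets: " + (", ".join(s["tickets"]) or "none")))


def test_open_tickets_handles_empty_list() -> None:
    # Context modules can be tested like code before they are deployed.
    rendered = REGISTRY[("open_tickets", "1.2")].render({"tickets": []})
    assert rendered == "Open tickets: none"


state = {"user": "ada", "tier": "pro", "tickets": ["T-1", "T-7"]}
print(assemble([("user_profile", "1.0"), ("open_tickets", "1.2")], state))
```

Pinning `(name, version)` pairs in the caller means a context change can be reviewed, tested, and rolled back independently of the agent code that consumes it.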

“Context engineering is the delicate art and science of filling the context window with just the right information for the next step.”

This is a radical shift. Context engineering involves running additional steps before the final model call, such as retrieval from a knowledge base, tool selection, task modeling, and protocol adherence. Instead of infrastructure focused on pipelines, we’ll see infrastructure that helps plan how to complete the overall task. Less LLM as an oracle, more LLM as your friendly ops teammate.
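The steps that run before the final model call can be sketched as a small pipeline. This is an illustration only: the knowledge base and tool list are toy data, and plain word overlap stands in for the vector search or reranker a production system would likely use. All function names are hypothetical.

```python
import re

# Toy data (assumptions); a real system would query a search index and a tool registry.
KNOWLEDGE_BASE = [
    "A refund over $500 requires manager approval.",
    "Password resets are handled through the identity portal.",
    "Each refund is issued to the original payment method.",
]
TOOLS = {
    "issue_refund": "issue a refund to a customer payment method",
    "reset_password": "trigger a password reset for a user account",
}
STOPWORDS = {"a", "an", "the", "to", "for", "of", "is", "are", "and", "over", "through"}


def tokens(text: str) -> set[str]:
    return {w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS}


def retrieve(query: str, k: int = 2) -> list[str]:
    # Step 1: rank documents by word overlap with the query (stand-in for vector search).
    q = tokens(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda d: len(q & tokens(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokens(d)]


def select_tools(query: str) -> list[str]:
    # Step 2: expose only the tools whose descriptions overlap with the task.
    q = tokens(query)
    return [name for name, desc in TOOLS.items() if q & tokens(desc)]


def build_context(query: str) -> str:
    # Step 3: assemble the structured context that precedes the final model call.
    facts = "\n".join(f"- {d}" for d in retrieve(query)) or "- none"
    tools = ", ".join(select_tools(query)) or "none"
    return f"Relevant facts:\n{facts}\n\nAvailable tools: {tools}\n\nTask: {query}"


print(build_context("Customer wants a refund for a $700 order"))
```

Only the refund facts and the `issue_refund` tool reach the prompt; the password-reset material is filtered out before the model ever sees it.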

LLMs have a limited context window, so choosing what goes into it is critical. Context engineering aims to strike a balance by providing only the most relevant context to the model. Too little, and the model lacks what it needs; too much irrelevant or poorly structured information, and output quality can degrade. Models tend to overlook information buried in the middle of long prompts (the “lost in the middle” effect), and performance can decay as the prompt grows (sometimes called “context rot”). Context engineering is about maximizing the effective information density of the prompt.
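Maximizing information density under a fixed window can be framed as a packing problem. The sketch below assumes each snippet already carries a relevance score from some upstream ranker and approximates tokens by word count (a real system would use the model’s tokenizer). It greedily fills the budget with the highest-scoring snippets, then places the strongest ones at the start and end of the context, a heuristic motivated by the “lost in the middle” finding. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    text: str
    relevance: float  # assumed to come from an upstream ranker; higher is more relevant


def approx_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def pack(snippets: list[Snippet], budget: int) -> list[Snippet]:
    # Greedily keep the most relevant snippets that still fit in the token budget.
    chosen: list[Snippet] = []
    used = 0
    for s in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = approx_tokens(s.text)
        if used + cost <= budget:
            chosen.append(s)
            used += cost
    # Alternate chosen snippets between front and back so the strongest
    # sit at the edges of the context and the weakest end up in the middle.
    front = chosen[0::2]
    back = chosen[1::2]
    return front + back[::-1]


snips = [
    Snippet("Refund policy requires manager approval above 500 dollars", 0.9),
    Snippet("Order 1234 was paid by credit card", 0.7),
    Snippet("Customer has been with us since 2019", 0.4),
    Snippet("Company holiday party is on Friday", 0.05),
]
for s in pack(snips, budget=22):
    print(s.relevance, s.text)
```

With a 22-word budget the irrelevant holiday-party snippet is dropped, and the two most relevant snippets land first and last. Greedy packing is not optimal in general (it is a knapsack-style problem), but it is simple and predictable, which matters when context assembly runs before every model call.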

In our fast-approaching future, agents won’t be viewed as chatbots. And you won’t use just one. Through context engineering, agents will become process owners that orchestrate a fleet of subagents. They’ll have an inbox of tasks to manage, a playbook to follow, and a continuously improving understanding of what matters and why. A planning agent will access the inbox and provide the appropriate context for specific subagents.
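A planning agent that reads the inbox and hands each subagent only the context it needs might look roughly like this. The subagent roster, the routing-by-task-kind rule, and the field names are assumptions made for illustration; the point is the scoping step, where each subagent receives a filtered slice of shared state rather than everything.

```python
from dataclasses import dataclass


@dataclass
class Subagent:
    name: str
    skills: set[str]        # task kinds this subagent handles (hypothetical)
    context_keys: set[str]  # shared-state fields this subagent is allowed to see


SUBAGENTS = [
    Subagent("billing_agent", {"refund", "invoice"}, {"customer_id", "payment_history"}),
    Subagent("support_agent", {"bug_report", "question"}, {"customer_id", "product_version"}),
]


def plan(inbox: list[dict], shared_state: dict) -> list[tuple[str, dict]]:
    assignments = []
    for task in inbox:
        # Route by task kind to the first subagent that claims that skill.
        agent = next((a for a in SUBAGENTS if task["kind"] in a.skills), None)
        if agent is None:
            continue  # unroutable tasks are skipped here; a real planner might escalate
        # Scope the context: pass only the state keys relevant to this subagent's role.
        scoped = {k: v for k, v in shared_state.items() if k in agent.context_keys}
        assignments.append((agent.name, {"task": task["body"], "context": scoped}))
    return assignments


state = {"customer_id": "c-42", "payment_history": ["$120"],
         "product_version": "3.1", "internal_notes": "VIP"}
inbox = [{"kind": "refund", "body": "Refund order 1234"},
         {"kind": "bug_report", "body": "App crashes on launch"}]
for name, payload in plan(inbox, state):
    print(name, payload)
```

Neither subagent sees `internal_notes`, and each sees only the fields its role needs, which keeps every subagent’s context window small and on-topic.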

To be clear, we’re still far from this agentic reality. There are significant barriers to adoption, and data is still a major bottleneck for agent capability. Computer-use benchmark scores leave plenty of room for improvement (38.1% on OSWorld, 58.1% on WebArena, and 87% on WebVoyager). Frontier labs like OpenAI and Anthropic are expected to spend heavily to raise them. Venture-backed startups like Browserbase, Browser Use, Letta, n8n, and others are pushing the boundaries of what agent-first infrastructure can look like.

However, the prize is large enough to justify the effort: OpenAI projects that agent sales will eclipse ChatGPT’s by 2030. And it makes sense. Why would developers spend time chaining several prompts together when an agent with the right context engineering can get the job done more pragmatically?

We know the primitives are changing. But what we may not have expected is that the future of automation looks a lot more like people: just faster, tireless, and programmable.

If you’re building for this world run by context engineering, please have your agent call my agent.

Thanks to Astasia Myers, Devansh Jain, Andrew Millich, and Eric Deng for their input on this post.