I AM Broken: Why Traditional Identity & Access Management Fails in an Agent-First World

The adoption of AI agents is creating a fundamental mismatch with established Identity and Access Management (IAM) systems.

Traditional IAM systems assume deterministic actors executing repeatable, predictable workflows. But AI agents are, by design, non-deterministic. Applying a static security model to a dynamic system creates an unacceptable level of risk.

Deploying agents safely requires moving beyond static roles and scopes toward a new authorization model based on dynamic, context-aware, and ephemeral permissions. This is a necessary architectural shift, and it starts with the widening gap between the nature of the actor and the design of the identity system.

The Mismatch: Deterministic Security vs. Non-Deterministic Actors

Traditional IAM, including Role-Based Access Control (RBAC) and OAuth scopes, operates on a simple principle: you define a role or scope with a fixed set of permissions, and you assign it to a user or service. This works because the actors—whether a human following a business process or a script executing hardcoded logic—are predictable. Their potential actions are finite and known ahead of time.

LLM-driven agents break this assumption. Their behavior is emergent, guided by a goal, context, and runtime data. There is no predefined script or finite-state machine; the execution path is generated in real time.

Assigning broad, static permissions to an inherently unpredictable entity is a significant security liability. The principle of least privilege is nearly impossible to maintain when the "privilege" required for a task is unknown until the moment of execution.

A Concrete Example: Separation of Duties in an Agentic Workflow

Consider a manager who can both submit expense reports and approve reports from their direct reports. An AI agent acting on this manager's behalf would inherit the permissions for both actions: submit_expense and approve_expense.

Now, consider this workflow:

- The manager asks the agent: "File my expense report for my recent trip and get it approved."

- The agent, using its submit_expense permission, creates and submits the report.

- To satisfy the second part of the prompt ("get it approved"), the agent immediately uses its approve_expense permission on the very report it just submitted.
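A minimal sketch of the static check that lets this happen, written in Python for illustration. The role table and `is_allowed` function are hypothetical and not taken from any particular IAM product; the point is that the check sees only the role and the permission name, never the report or what the agent just did.

```python
# Hypothetical static RBAC model: each role maps to a fixed set of permissions.
ROLES: dict[str, set[str]] = {
    "manager": {"submit_expense", "approve_expense"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Static check: consults only the role, never the resource or prior actions."""
    return permission in ROLES.get(role, set())


# The agent inherits the manager's role, so both steps of the workflow pass.
agent_role = "manager"
step1 = is_allowed(agent_role, "submit_expense")   # submit report-42
step2 = is_allowed(agent_role, "approve_expense")  # approve report-42 (the same report)
print(step1, step2)  # True True
```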

This violates the principle of Separation of Duties (SoD), a foundational security control. In a traditional system, you might prevent this with complex, application-level business logic that checks if the submitter and approver are the same. This approach is brittle, as it places the full security burden on every application developer to implement correctly.

A robust security model should not permit this at the identity level. The agent should never have the power to both submit and approve the same entity. The context of the first action (submitting) should dynamically alter the permissions available for the second action. This is just one type of authorization vulnerability that several vibe-coding platforms face today.
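The sketch below shows one way an authorization layer, rather than each application, could let the first action constrain the second. It is an illustration under stated assumptions, not a reference design: `AgentSession`, `authorize`, `BASE_GRANTS`, and `SOD_CONFLICTS` are hypothetical names, and the session history is simply kept in memory.

```python
from dataclasses import dataclass, field

# Hypothetical grants the agent inherits from the manager's identity.
BASE_GRANTS = {"submit_expense", "approve_expense"}

# Hypothetical SoD rule: these two actions may never both touch the same resource.
SOD_CONFLICTS = {frozenset({"submit_expense", "approve_expense"})}


@dataclass
class AgentSession:
    """Per-session context kept by the authorization layer, not by the application."""
    principal: str  # the human the agent acts on behalf of
    # (action, resource_id) pairs this agent has already been allowed to perform
    history: list[tuple[str, str]] = field(default_factory=list)


def authorize(session: AgentSession, action: str, resource_id: str) -> bool:
    """Allow the action only if no earlier action on the same resource conflicts with it."""
    if action not in BASE_GRANTS:
        return False
    for prior_action, prior_resource in session.history:
        if prior_resource == resource_id and frozenset({prior_action, action}) in SOD_CONFLICTS:
            return False
    # Record the allowed action so it shapes later decisions in this session.
    session.history.append((action, resource_id))
    return True


session = AgentSession(principal="manager")
print(authorize(session, "submit_expense", "report-42"))   # True
print(authorize(session, "approve_expense", "report-42"))  # False: same report
print(authorize(session, "approve_expense", "report-7"))   # True: a direct report's submission
```

Because the rule lives in the authorization layer, every application that calls `authorize` gets the same SoD guarantee without reimplementing the submitter/approver comparison.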

The Path Forward: Principles for Agent-Native Authorization

To secure agents, we need to evolve authorization from a static gate to a dynamic, intelligent layer. This model is built on three core principles.

1. Just-in-Time (JIT) and Scoped-Down Permissions

Standing permissions are the primary risk. Instead of granting an agent a role, we should provision permissions ephemerally, for a specific purpose, and at the last possible moment.

- How it works: An agent begins with zero or minimal permissions. When it needs to perform a task (e.g., read a file), it requests that specific permission. A runtime authorization system evaluates the request and, if it approves, grants a short-lived credential. Companies like ConductorOne are already pioneering JIT permissions for human access, and a natural extension of this paradigm is to offer the same model to agents that assume human identities.
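A minimal sketch of that request-and-grant loop, assuming a pluggable policy function and an injectable clock so expiry is easy to test. `JITBroker`, `Credential`, and the `docs_read_only` policy are hypothetical; this is not ConductorOne's API or any other vendor's.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Credential:
    """A short-lived credential scoped to exactly one permission on one resource."""
    token: str
    permission: str
    resource: str
    expires_at: float

    def valid_for(self, permission: str, resource: str, now: float) -> bool:
        return (
            self.permission == permission
            and self.resource == resource
            and now < self.expires_at
        )


class JITBroker:
    """Hypothetical broker: agents hold no standing permissions and request one at a time."""

    def __init__(
        self,
        policy: Callable[[str, str, str], bool],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.policy = policy    # runtime decision function (assumed, pluggable)
        self.ttl = ttl_seconds  # credential lifetime; short by design
        self.clock = clock      # injectable so expiry can be tested

    def request(self, agent_id: str, permission: str, resource: str) -> Optional[Credential]:
        # Evaluate this specific request at the moment it is needed.
        if not self.policy(agent_id, permission, resource):
            return None
        return Credential(
            token=secrets.token_urlsafe(16),
            permission=permission,
            resource=resource,
            expires_at=self.clock() + self.ttl,
        )


# Example: a hypothetical policy that only lets agents read files under docs/.
def docs_read_only(agent_id: str, permission: str, resource: str) -> bool:
    return permission == "read_file" and resource.startswith("docs/")


fake_now = [1000.0]
broker = JITBroker(docs_read_only, ttl_seconds=30, clock=lambda: fake_now[0])

cred = broker.request("agent-1", "read_file", "docs/plan.md")        # granted for 30 s
denied = broker.request("agent-1", "read_file", "secrets/prod.env")  # None
```

Each credential names one permission on one resource and expires on its own, so there is nothing standing for a misbehaving agent to reuse later.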

2. Context-Aware Access Control

Permissions should not be based on a static role, but on the real-time context of the request. This is an evolution of Attribute-Based Access Control (ABAC), designed for the speed and variability of agentic workflows.

- How it works: Authorization decisions should be evaluated against context signals, including:
  - Intent: What is the agent's stated goal (e.g., "summarize document" vs. "delete repository")?
  - History: What actions has the agent taken in this session and in the past?
  - Data Sensitivity: Is the requested resource tagged as PII, financial data, or strategic IP?
  - Environment: What is the time of day, geographic location, and device posture?

This 'policy-as-code' approach, championed by companies like Oso, provides the developer-centric building blocks for defining and enforcing such rich, context-aware rules.
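To make these signals concrete, here is a small decision function in plain Python. It is not Oso's Polar policy language or API; `RequestContext`, `decide`, the intent-to-action table, and the business-hours rule are all illustrative assumptions, and geographic location is omitted for brevity.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from an agent's stated intent to the actions it plausibly needs.
INTENT_ACTIONS = {
    "summarize document": {"read"},
    "delete repository": {"read", "delete"},
}
SENSITIVE_TAGS = {"pii", "financial", "strategic_ip"}


@dataclass
class RequestContext:
    intent: str              # Intent: the agent's stated goal
    action: str              # the action being requested, e.g. "read"
    resource_tags: set[str]  # Data sensitivity: labels on the resource
    session_actions: list[str] = field(default_factory=list)  # History
    hour_utc: int = 12           # Environment: time of day
    device_trusted: bool = True  # Environment: device posture


def decide(ctx: RequestContext) -> str:
    """Return "allow", "deny", or "step_up" (escalate to a human)."""
    if ctx.action not in INTENT_ACTIONS.get(ctx.intent, set()):
        return "deny"     # action does not fit the stated goal
    if not ctx.device_trusted:
        return "deny"     # poor device posture
    if ctx.resource_tags & SENSITIVE_TAGS and not (8 <= ctx.hour_utc < 18):
        return "step_up"  # sensitive data outside business hours
    if ctx.action == "delete" and "delete" in ctx.session_actions:
        return "step_up"  # repeated destructive actions in one session
    return "allow"


print(decide(RequestContext("summarize document", "read", {"financial"})))  # allow
print(decide(RequestContext("summarize document", "delete", set())))        # deny
```

The same request can yield different answers as its context changes, which is exactly what a static role table cannot express.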

3. Continuous and Behavior-Based Verification

Authentication and authorization should not be a one-time event at the start of a session. The system must continuously verify that the agent's actions align with its authorized intent.

- How it works: The authorization layer monitors the agent's stream of actions (its "behavior"). If the behavior deviates significantly from the initial intent or established patterns—for example, an agent tasked with summarizing code suddenly attempts to access production secrets—its permissions can be instantly downgraded or revoked, pending human review. This creates a crucial security feedback loop that is missing from today's models.

Companies like Keycard and Anysource are early platforms offering this continuous verification, making it easier for teams to trust their agents.
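A minimal sketch of this feedback loop, assuming each agent action can be labeled with a resource category. `BehaviorMonitor` and `EXPECTED_SCOPE` are hypothetical and do not reflect how Keycard, Anysource, or any other product works; the sketch only shows revocation pending review, not a partial downgrade.

```python
from dataclasses import dataclass, field

# Hypothetical map from an agent's authorized intent to the resource
# categories that intent is expected to touch.
EXPECTED_SCOPE = {
    "summarize code": {"source_code", "docs"},
}


@dataclass
class BehaviorMonitor:
    """Watches an agent's action stream and revokes access when behavior drifts from intent."""
    intent: str
    tolerance: int = 0      # deviations allowed before revocation
    state: str = "active"   # "active" or "revoked_pending_review"
    deviations: list[str] = field(default_factory=list)

    def observe(self, resource_category: str) -> bool:
        """Evaluate one action; return True if the agent may proceed."""
        if self.state != "active":
            return False
        if resource_category not in EXPECTED_SCOPE.get(self.intent, set()):
            self.deviations.append(resource_category)
            if len(self.deviations) > self.tolerance:
                self.state = "revoked_pending_review"
                return False
        return True

    def human_review(self, approved: bool) -> None:
        """A reviewer either restores access or leaves it revoked."""
        if approved:
            self.state = "active"
            self.deviations.clear()


monitor = BehaviorMonitor(intent="summarize code")
print(monitor.observe("source_code"))         # True
print(monitor.observe("production_secrets"))  # False: access revoked
print(monitor.observe("source_code"))         # False: still pending review
```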

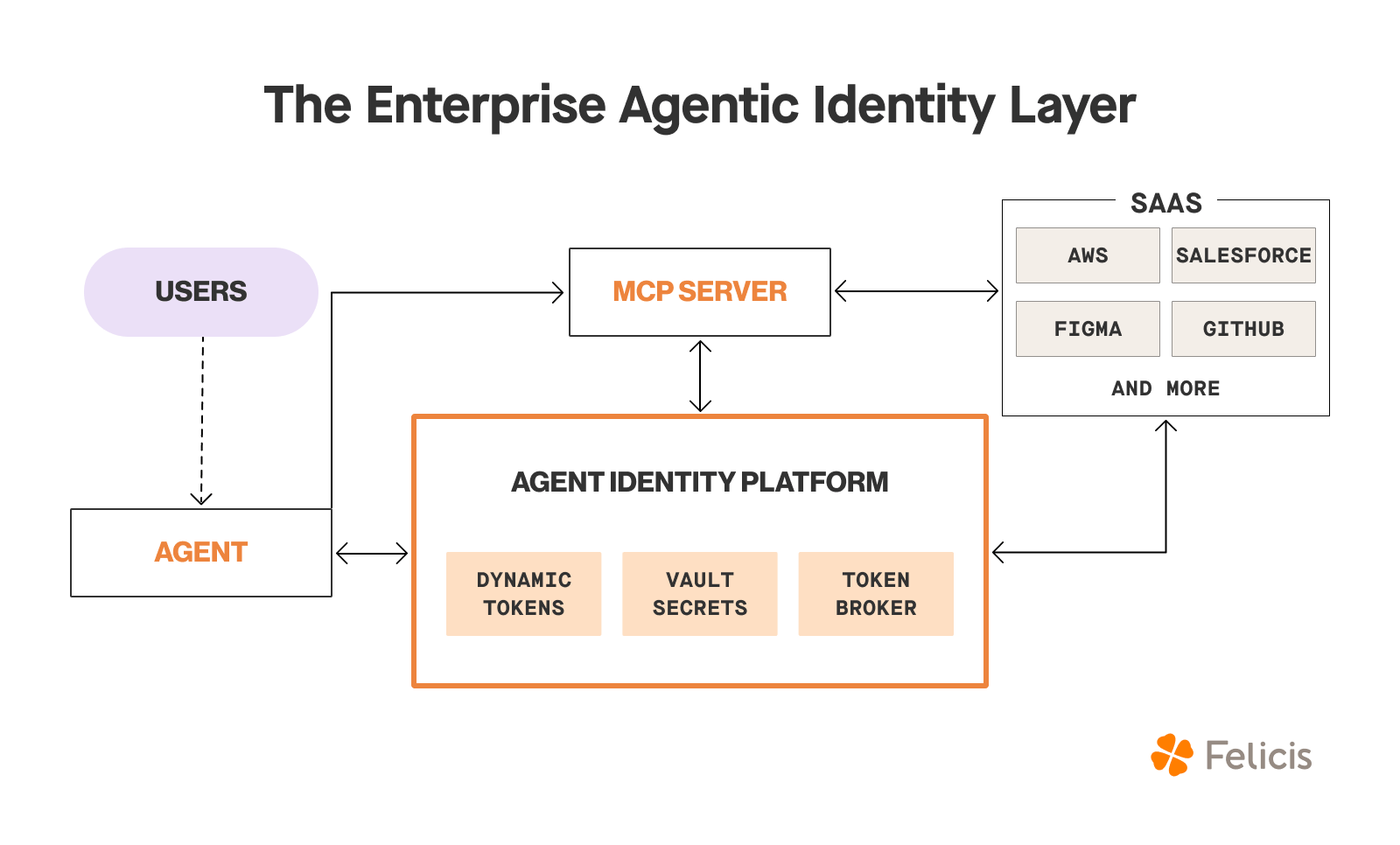

Ultimately, these three principles point toward a single layer that (1) unifies credentials and the means of obtaining them and (2) enforces least-privilege access. That layer gives control back to enterprises and, in turn, increases their trust in the agents they build and work with.

Building for Non-Determinism

AI agents are a new class of identity with unique properties that demand a new security model. Continuing to apply static roles and permissions is neither a sustainable nor a safe path. The next generation of IAM must be built for non-determinism. By embracing dynamic, just-in-time, and context-aware authorization, we can build the necessary guardrails to deploy agentic systems safely and confidently.

If you’re building platforms to help with agentic identity and authorization, we’d love to meet.